Triton Information

However, backups can be configured with server-side encryption separately.

Whenever a host is specified, we do require the port to be specified as well, since a provider need not use the AWS S3 default port of 443.

Customers interested in an S3 translation layer for their Microsoft Azure installations can purchase MinIO Blob Storage Gateway (S3 API) from Azure Marketplace. The consolidated form lets GitLab use a single credential for object storage with multiple buckets. MinIO established itself as the standard for AWS S3 compatibility from its inception.

Be sure to upgrade to GitLab 13.3.0 or above if you use S3 storage with this hardware. Additionally, 20.10 is old now, and I would recommend moving up to the latest release.

GitLab checks that the MD5 checksum of the uploaded data equals the ETag header returned from the S3 server. On macOS, the connection profile will be opened automatically in edit mode. Using separate buckets for each data type is the recommended approach for GitLab.

But not all S3 compatibility is the same: many object storage vendors support only a small fraction of the overall functionality, and this causes applications to fail. MinIO releases software weekly, and any shortcoming in its S3 API support is immediately reported by the community and rectified by MinIO.

Save the file and reconfigure GitLab for the changes to take effect.

Since I do not have access to Oracle's S3-compatible storage, I would appreciate your help in triaging the issue. @chandrameenamohan I have not been able to get access to Oracle's S3-compatible storage, but it looks like the issue could be that the AWS credentials are not being passed to Triton correctly.

The proxy_download setting controls this behavior: the default is generally false. The consolidated object storage configuration is used only if all lines from the original storage-specific sections are commented out.

If you're using AWS IAM profiles, omit the AWS access key and secret access key/value pairs. To add a profile for another region, you need to download the profile for that region.

When configured either with an instance profile or with the consolidated form, GitLab Workhorse properly uploads files to S3 buckets that have SSE-S3 or SSE-KMS encryption enabled by default.

Execute the Python code to list all buckets and upload the file /tmp/hello.txt to the bucket. You can now see the file appear in your OCI bucket.
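The Python step above can be sketched with Boto3, Amazon's Python SDK, pointed at OCI's S3-compatible endpoint. This is a minimal sketch, not Oracle's exact sample: the helper names `oci_s3_endpoint` and `list_and_upload` are hypothetical, the endpoint pattern is taken from the example URL `https://mynamespace.compat.objectstorage.us-phoenix-1.oraclecloud.com`, and the access key and secret key are assumed to be an OCI customer secret key pair.

```python
import os


def oci_s3_endpoint(namespace: str, region: str) -> str:
    """Build the OCI S3-compatibility endpoint URL for a tenancy namespace and region."""
    return f"https://{namespace}.compat.objectstorage.{region}.oraclecloud.com"


def list_and_upload(namespace, region, access_key, secret_key, bucket,
                    path="/tmp/hello.txt"):
    # Imported here so the endpoint helper above works without boto3 installed.
    import boto3

    s3 = boto3.client(
        "s3",
        region_name=region,
        endpoint_url=oci_s3_endpoint(namespace, region),  # no port needed for HTTPS
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )
    # List every bucket visible to this key pair.
    for b in s3.list_buckets().get("Buckets", []):
        print(b["Name"])
    # Upload the local file, using its base name as the object key.
    s3.upload_file(path, bucket, os.path.basename(path))
```

Calling `list_and_upload("mynamespace", "us-phoenix-1", key, secret, "my-bucket")` with real credentials should print the bucket names and then place `hello.txt` in `my-bucket`.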

Steps to reproduce the behavior:

MLflow asks for one more environment variable: "To store artifacts in a custom endpoint, set the MLFLOW_S3_ENDPOINT_URL to your endpoint's URL". I don't need to provide a port number there. This can also be done using an AWS Lambda function written in Python.

If you are currently storing data locally, you need to migrate it to object storage. Background upload is not supported. See the full table for a complete list.

Pre-signed upload URLs do not have a Content-MD5 HTTP header computed for them. All object types share a common set of parameters.

I don't have enough bandwidth to check the resolution of the build error.

Server-side encryption options are set in the storage_options configuration section. As with the case for default encryption, these options only work when the Workhorse S3 client is enabled.

To fix this issue, you have two options; the first option is recommended for MinIO.

UPDATE: I tried it with port number 443 but still got the same error.

@chandrameenamohan You should not get the same error.

Some providers do not support this and return a 404 error when files are copied during the upload process.

Clients can download files in object storage by receiving a pre-signed, time-limited URL, or by GitLab proxying the data from object storage to the client.

If you're working to scale out your GitLab implementation or add fault tolerance and redundancy, you may be looking at removing dependencies on block or network file systems.
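A minimal sketch of the MLflow environment for OCI, assuming MLflow reaches the artifact store through Boto3 and that the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` variables hold an OCI customer secret key. The helper name `mlflow_oci_env` and the placeholder values are hypothetical. The endpoint URL carries no port because HTTPS defaults to 443.

```python
import os


def mlflow_oci_env(namespace: str, region: str, access_key: str, secret_key: str) -> dict:
    """Environment variables for MLflow artifacts stored through OCI's S3-compatible API."""
    return {
        "MLFLOW_S3_ENDPOINT_URL": f"https://{namespace}.compat.objectstorage.{region}.oraclecloud.com",
        "AWS_ACCESS_KEY_ID": access_key,
        "AWS_SECRET_ACCESS_KEY": secret_key,
        # boto3 reads the region from this variable when none is passed explicitly.
        "AWS_DEFAULT_REGION": region,
    }


if __name__ == "__main__":
    # Apply to the current process before starting MLflow (placeholder values).
    os.environ.update(mlflow_oci_env("mynamespace", "us-phoenix-1", "my-access-key", "my-secret"))
```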

You can offload your RDS transactions as CSV or Parquet files directly to Oracle Object Storage, bypassing any middle tier. The Workhorse S3 client is enabled when use_iam_profile is true in the connection setting, or when consolidated object storage settings are in use.

With an instance profile, you do not need to supply credentials for the instance. For storage types not supported by the consolidated configuration form, refer to the storage-specific guides.

Create a bucket in your OCI tenancy where you will load the file (OCI Console > Object Storage > Buckets > Create Bucket).

If uploads fail, it's likely this is due to encryption settings on your bucket.

Is there an option in Triton server to run a script that downloads the models from the respective cloud?

Previously, object store connection parameters such as passwords and endpoint URLs had to be duplicated for each type.

The most comprehensive support for the S3 API means that applications can leverage data stored in MinIO on any hardware, at any location, and on any cloud.

- https://docs.oracle.com/en-us/iaas/Content/Object/Tasks/s3compatibleapi.htm
- https://mynamespace.compat.objectstorage.us-phoenix-1.oraclecloud.com
- https://www.mlflow.org/docs/latest/tracking.html#artifact-stores
- https://github.com/triton-inference-server/server/blob/b6224aeced03f554dd9040e769e53a9da276be32/qa/L0_storage_S3/test.sh

The Azure storage account access key is typically a secret, 512-bit encryption key encoded in base64.

The source installation configuration makes the inheritance easier to see, and the Omnibus configuration maps directly to it. Both the consolidated configuration form and the storage-specific configuration form must configure a connection.

You may need to migrate GitLab data in object storage to a different object storage provider.

I tried with port 443, but it did not work for me.
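To sanity-check the 512-bit, base64 key shape described above, a small validator can catch copy-paste truncation. This sketch checks only the encoding and the length (64 decoded bytes); it cannot tell whether Azure will accept the key. `is_plausible_azure_key` is a hypothetical helper name.

```python
import base64
import binascii
import secrets


def is_plausible_azure_key(key: str) -> bool:
    """Return True if key is strict base64 that decodes to exactly 64 bytes (512 bits)."""
    try:
        raw = base64.b64decode(key, validate=True)
    except (binascii.Error, ValueError):
        return False
    return len(raw) == 64


# A random key of the same shape, e.g. for a local test fixture.
sample_key = base64.b64encode(secrets.token_bytes(64)).decode("ascii")
```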
Consolidated object storage configuration can't be used for backups. When consolidated object storage is used, only AWS (and S3-compatible providers), Google Cloud Storage, and Azure Blob Storage can be used, and direct upload is enabled automatically.

If such an option does not exist, it would be good to have: you may not be able to add support for every cloud service, but this could help many users.

In the Oracle example, no port number is required to create the AWS S3 client with `boto3.resource(...)`.

For connecting to OCI Object Storage, Cyberduck version 6.4.0 or later is required.

Please let us know if you still face the problem.

Some provider features are not compatible with the Fog library that GitLab uses.

I did not get a chance, as we have a production release at the end of this month.

AWS S3 compared with OCI Object Storage:
- Organization: OCI storage buckets are deployed inside compartments.
- Object metadata: yes, you can assign metadata tags to objects.
- Object versioning: yes. When versioning is enabled on a bucket, data is not lost when an object is overwritten or when a versioning-unaware delete operation is performed. In both cases, the previous contents of the object are saved as a previous version of the object.
- Multipart upload: recommended for objects bigger than 100 MB.
- Endpoints: AWS S3 buckets are accessed using S3 API endpoints; OCI Object Storage can be accessed through a dedicated regional API endpoint, and its native API endpoints follow a similar pattern.
- Storage classes: AWS offers S3 Standard, S3 Standard-Infrequent Access, and S3 One Zone-Infrequent Access for long-lived data, plus Amazon S3 Glacier and Amazon S3 Glacier Deep Archive; OCI offers Standard, Infrequent Access, and Archive tiers.

This can be a business-saving event if AWS has a region-wide outage. See the section on ETag mismatch errors for more details.

For a local or private instance of S3, the prefix s3:// must be followed by the host and port (separated by a colon) and subsequently the bucket path.
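The host-and-port form for a private or S3-compatible repository can be composed and checked in a few lines. This sketch assumes the layout `s3://host:port/bucket/path` with a colon between host and port; the regular expression is a simplified stand-in written for illustration, not Triton's actual pattern, and both function names are hypothetical.

```python
import re

# Simplified, hypothetical pattern for s3://host:port/bucket[/path].
_S3_REPO = re.compile(r"^s3://([A-Za-z0-9.\-]+):(\d+)/([A-Za-z0-9._\-]+)(/.*)?$")


def triton_s3_repo(host: str, port: int, bucket: str, path: str = "") -> str:
    """Compose a model-repository URL for a private or S3-compatible endpoint."""
    url = f"s3://{host}:{port}/{bucket}"
    if path:
        url += "/" + path.strip("/")
    return url


def parse_triton_s3_repo(url: str):
    """Split a repository URL into (host, port, bucket, path), or None if it doesn't match."""
    m = _S3_REPO.match(url)
    if m is None:
        return None  # e.g. the port is missing
    host, port, bucket, path = m.groups()
    return host, int(port), bucket, (path or "").lstrip("/")
```

The resulting string is meant to be passed to Triton's `--model-repository` option, for example with the OCI host and port 443.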
`aws --endpoint-url https://namespace.compat.objectstorage.us-ashburn-1.oraclecloud.com s3api get-object --bucket model-bucket --key dir/config.pbtxt config.pbtxt`

Create an AWS Identity and Access Management (IAM) role with the necessary permissions. Verify that there are objects in the new bucket.
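The same single-object download can be done from Python with Boto3's `download_file`, which could sit inside a startup script that fetches models before Triton starts. This is a sketch assuming an already-configured S3 client (for example one created with the OCI `endpoint_url`); `download_model_config` is a hypothetical name.

```python
import os


def download_model_config(s3_client, bucket: str, key: str, dest_dir: str = ".") -> str:
    """Python counterpart of `aws s3api get-object`: fetch one object to a local file.

    s3_client is assumed to be a boto3 S3 client created with the provider's endpoint_url.
    """
    dest = os.path.join(dest_dir, os.path.basename(key))
    s3_client.download_file(bucket, key, dest)
    return dest
```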

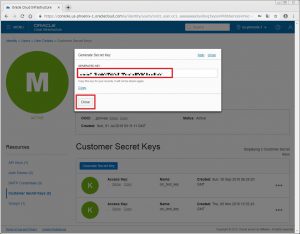

I think that, along with the hostname, you may expect the port number. This helps reduce the amount of egress traffic GitLab needs to process. Set proxy_download to true if you want GitLab to proxy all files it serves.

The bucket is a required parameter within each type. This maps to the corresponding Omnibus GitLab configuration. Only the object types listed in the full table are valid. Within each object type, three parameters can be defined: enabled, bucket, and proxy_download. As seen above, object storage can be disabled for specific types.

The endpoint parameter can be used when configuring an S3-compatible service such as MinIO.

During your maintenance window, you must do two things.

Rumor has it that even Amazon tests third-party S3 compatibility using MinIO.

Without consolidated object store configuration or instance profiles enabled, GitLab Workhorse uploads files to S3 using pre-signed URLs.

The views expressed are those of the authors and not necessarily of Oracle.

Error in using S3-Compatible Storage [Oracle Cloud Infrastructure (OCI) Object Storage].

To do this, you must configure GitLab to send the proper encryption headers. We look forward to hearing back from you so we can proceed with resolving any potential issues with OCI S3 support.

By leveraging sophisticated, S3 API-powered ILM techniques, enterprises can execute operationally optimized instances across clouds and on-prem instances.

azure_storage_domain, the domain name used to contact the Azure Blob Storage API, is optional and does not have to be set in the Workhorse configuration.

I got the error: "creating server: Internal - Could not get MetaData for bucket with triton-repo".

I added some verbose error reporting for S3 that can help you zero in on the issue: #2557. You can apply the patch from there and confirm.

If the machines do not have the right access scopes, errors may appear in the logs.

Although Azure uses the word container to denote a collection of blobs, GitLab standardizes on the term bucket.

With use_iam_profile, GitLab fetches temporary credentials each time an S3 bucket is accessed, so no hard-coded values are needed in the configuration.

S3 compatibility is a hard requirement for cloud-native applications. I wanted to use it as the artifact store for an MLflow tracking server.
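The per-type parameters (`enabled`, `bucket`, `proxy_download`) can be checked before reconfiguring GitLab. This is a hypothetical validator, not part of GitLab: it assumes a type counts as enabled unless `enabled` is explicitly false, and it flags two enabled types that share the same bucket string, in line with the separate-buckets recommendation.

```python
def validate_object_store(objects: dict) -> list:
    """Check per-type settings of a consolidated object_store section.

    objects maps a type name (e.g. "artifacts") to a dict that may contain
    "enabled", "bucket" and "proxy_download". Returns a list of problems.
    """
    problems = []
    seen = {}  # bucket -> first type that uses it
    for name, cfg in objects.items():
        if cfg.get("enabled", True) is False:
            continue  # a disabled type needs no bucket
        bucket = cfg.get("bucket")
        if not bucket:
            problems.append(f"{name}: bucket is required when enabled")
            continue
        if bucket in seen:
            problems.append(f"{name}: bucket {bucket!r} already used by {seen[bucket]}")
        else:
            seen[bucket] = name
        if not isinstance(cfg.get("proxy_download", False), bool):
            problems.append(f"{name}: proxy_download must be true or false")
    return problems
```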
A pre-signed, time-limited URL can be shared with others without authentication. Download the Oracle Storage Cloud profile for preconfigured settings using the /auth/v1.0 authentication context.

This includes modern application workloads like GitHub and GitLab for code repositories, modern analytics workloads like database storage for MongoDB, ClickHouse, MariaDB, CockroachDB, and Teradata, and traditional archival, backup, and disaster recovery use cases.

LFS, for example, generates an error in this case. Clients need to trust the certificate authority that issued the object storage certificate.

Is there a reason for building the image on macOS? There is this one: https://github.com/triton-inference-server/server/blob/b6224aeced03f554dd9040e769e53a9da276be32/qa/L0_storage_S3/test.sh, but is there any simple, small script to verify the setup?

C. Copy the Access Key that is displayed after you create the customer secret key.

You can find this example at https://docs.oracle.com/en-us/iaas/Content/Object/Tasks/s3compatibleapi.htm. Verify that you can read the old data.

With a single bucket, if your bucket is called my-gitlab-objects, you can configure uploads to go into my-gitlab-objects/uploads, artifacts into my-gitlab-objects/artifacts, and so on.

MinIO was the first to support AWS Signature Version 4 (with support for the deprecated Signature Version 2).

The consolidated form can simplify your GitLab configuration since the connection details are shared across object types. This eliminates additional complexity and unnecessary redundancy. Network firewalls could block access.

I do not have a way of sharing the image with you.
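The single-bucket layout (`my-gitlab-objects/uploads`, `my-gitlab-objects/artifacts`) amounts to splitting a `bucket/prefix` string. This is a minimal sketch with hypothetical helper names; it does not reproduce how GitLab itself parses the value.

```python
def split_bucket_prefix(value: str) -> tuple:
    """Split 'bucket/prefix/...' into ('bucket', 'prefix/...'); prefix may be ''."""
    bucket, _, prefix = value.strip("/").partition("/")
    return bucket, prefix


def virtual_buckets(bucket: str, types) -> dict:
    """Map each object type to its own prefix inside one real bucket."""
    return {t: f"{bucket}/{t}" for t in types}
```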

To disable object storage for a specific type, set its enabled flag to false.

Enter the following information in the bookmark:
- Protocol: Swift (OpenStack Object Storage)
- Server: IdentityDomain.storage.oraclecloud.com


Each object type has an object-specific configuration block in which the enabled and proxy_download flags can be overridden. If the storage-specific sections are not all commented out, the storage-specific configuration is used.

Duplicating a profile and only changing the region endpoint will not work and will result in "Listing directory / failed" errors.

You can use your GitLab server to run Rclone.

I created .aws/config and .aws/credentials files.

With Omnibus and source installations, it is possible to split a single real bucket into multiple virtual buckets.

Copy this key and keep it somewhere safe, as it will not be displayed again.
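The `.aws/config` and `.aws/credentials` files are INI files, so they can be generated with `configparser`. This is a sketch with a hypothetical helper `aws_profile_files`; it follows the AWS CLI convention that named profiles in `config` use a `[profile NAME]` header while `credentials` uses `[NAME]`.

```python
import configparser
import io


def aws_profile_files(access_key: str, secret_key: str, region: str, profile: str = "default"):
    """Render the text of ~/.aws/credentials and ~/.aws/config for one profile."""
    creds = configparser.ConfigParser()
    creds[profile] = {"aws_access_key_id": access_key, "aws_secret_access_key": secret_key}

    config = configparser.ConfigParser()
    # In ~/.aws/config, non-default profiles use a "profile NAME" section header.
    section = profile if profile == "default" else f"profile {profile}"
    config[section] = {"region": region}

    out = []
    for parser in (creds, config):
        buf = io.StringIO()
        parser.write(buf)
        out.append(buf.getvalue())
    return tuple(out)  # (credentials_text, config_text)
```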

I will update you in the first or second week of May. I tried with the AWS CLI, and it worked for me :).

error: creating server: Internal - Could not get MetaData for bucket with name objectstorage.region-identifier.oraclecloud.com

Describe the models (framework, inputs, outputs), ideally including the model configuration file (if using an ensemble, include the model configuration file for that as well).

I will work on fixing this. For source installations, Workhorse also needs to be configured with Azure credentials.

OCI Object Storage is S3-compatible: https://docs.oracle.com/en-us/iaas/Content/Object/Tasks/s3compatibleapi.htm. The host setting specifies the S3-compatible host when you are not using AWS. Amazon's Python SDK is called Boto3.

When not proxying files, GitLab returns an HTTP 302 redirect with a pre-signed, time-limited object storage URL. Bandwidth charges may also be incurred. Some clients may report https->http downgrade errors and refuse to process the redirect.

However, I recommend the Docker build process, as it is the quickest way to get a working Triton build for x86-64 Ubuntu 20.04.

For example, you can disable object storage for CI artifacts; a bucket is not needed if the feature is disabled entirely. This is enforced by the regex as well, which is why you see the error stated above.
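The connection rules in this section (omit the key pair when using an IAM profile; set an S3-compatible endpoint when not using AWS) can be summarized as a builder for the `connection` hash. The key names mirror GitLab's S3 connection settings (`provider`, `region`, `aws_access_key_id`, `aws_secret_access_key`, `use_iam_profile`, `endpoint`, `path_style`); the function itself is a hypothetical sketch, and whether a given provider needs `path_style` is not established here.

```python
def gitlab_s3_connection(region, access_key=None, secret_key=None,
                         use_iam_profile=False, endpoint=None, path_style=False):
    """Build a connection dict for GitLab's consolidated object storage settings."""
    conn = {"provider": "AWS", "region": region}
    if use_iam_profile:
        # With an IAM profile, the access key/secret key pairs are omitted entirely.
        conn["use_iam_profile"] = True
    else:
        if not (access_key and secret_key):
            raise ValueError("access_key and secret_key are required without an IAM profile")
        conn["aws_access_key_id"] = access_key
        conn["aws_secret_access_key"] = secret_key
    if endpoint:
        # S3-compatible services (MinIO, OCI, ...) are reached through a custom endpoint.
        conn["endpoint"] = endpoint
        conn["path_style"] = path_style
    return conn
```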

I have provided the correct credentials and still got the same error. There are plans to enable the use of a single bucket in the future.

@chandrameenamohan, can you share your email address with me so I can share the latest master build for you to test? You can also rebuild from source.

Make sure your virtual machines have the correct access scopes to access Google Cloud APIs.

The S3 API is the de facto standard in the cloud and, as a result, alternatives to AWS must speak the API fluently to function and interoperate across diverse environments: public cloud, private cloud, datacenter, multi-cloud, hybrid cloud, and the edge. Developers are free to innovate and iterate, safe in the knowledge that MinIO will never break a release.

I installed the CLI in the NVIDIA Triton server 20.10 Docker image.

Ceph S3 prior to Kraken 11.0.2 does not support the Upload Copy Part API.

Include your namespace in the endpoint URL, for example endpoint_url="https://mynamespace.compat.objectstorage.us-phoenix-1.oraclecloud.com".

For source installations, edit /home/git/gitlab/config/gitlab.yml and /home/git/gitlab-workhorse/config.toml, add or amend the object storage lines, then save the files and restart GitLab for the changes to take effect.

ETag mismatch errors occur if server-side encryption headers are used without enabling the Workhorse S3 client.

Amazon S3 Standard, S3 Standard-IA, S3 Intelligent-Tiering, S3 One Zone-IA, S3 Glacier, and S3 Glacier Deep Archive are all designed to provide 99.999999999% (11 nines) of data durability of objects over a given year.

Closing this ticket. For more details, see how to transition to consolidated form.
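The ETag comparison can be illustrated with `hashlib`. This sketch assumes the common S3 behavior: a single-part upload without SSE-KMS or SSE-C returns the hex MD5 of the body as its ETag, while a multipart upload returns the MD5 of the concatenated binary part digests plus a part count. An encrypted or multipart object therefore will not match a plain MD5, which is the kind of mismatch described above. Individual providers may behave differently, and the helper names are hypothetical.

```python
import hashlib


def single_part_etag(data: bytes) -> str:
    """ETag typically returned for a single-part, non-KMS/SSE-C upload: the hex MD5."""
    return hashlib.md5(data).hexdigest()


def multipart_etag(data: bytes, part_size: int) -> str:
    """Common multipart ETag: MD5 of the concatenated binary part MD5s, plus '-<parts>'."""
    digests = [hashlib.md5(data[i:i + part_size]).digest()
               for i in range(0, len(data), part_size)]
    return f"{hashlib.md5(b''.join(digests)).hexdigest()}-{len(digests)}"


def etag_matches(data: bytes, etag: str) -> bool:
    """Compare a returned ETag (surrounding quotes stripped) with the MD5 of what was sent."""
    return etag.strip('"') == single_part_etag(data)
```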
After the first sync completes, use the web UI or command-line interface of your new object storage provider to verify that there are objects in the new bucket. The error would be different when the port is specified.

Applications and clients must authenticate to access any MinIO administrative API.

Could we have a solution inspired by the Python code?

Add at least two remotes: one for the object storage provider your data is currently on (old), and one for the provider you are moving to (new).

Using separate buckets ensures there are no collisions across the various types of data GitLab stores.

In my case, the bucket name is Shadab-DB-Migrate, on OCI in region ap-sydney-1.

@CoderHam, how do we get Triton to give the more specific S3-related error you mentioned above?

Customer master keys (CMKs) and SSE-C encryption are not supported, since this requires sending the encryption keys in every request. See Migrate to object storage for migration details. The Workhorse S3 client eliminates the need to compare ETag headers returned from the S3 server.

When you specify the S3 repository, note that the port must be specified.

Another benefit of object storage is that you can store data along with metadata for each object and apply certain actions based on that metadata.
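The Rclone migration can be scripted once the `old` and `new` remotes exist. This is a minimal sketch: `rclone sync` with `-P` (progress) and `--dry-run` are real Rclone options, but the wrapper functions are hypothetical. `sync` deletes destination files that are missing from the source, so a dry run first is a sensible precaution.

```python
import shlex
import subprocess
from typing import List, Optional


def rclone_sync_cmd(old_remote: str, new_remote: str, bucket: str,
                    new_bucket: Optional[str] = None, dry_run: bool = False) -> List[str]:
    """Build `rclone sync` arguments that copy one bucket between two configured remotes."""
    cmd = ["rclone", "sync", "-P", f"{old_remote}:{bucket}", f"{new_remote}:{new_bucket or bucket}"]
    if dry_run:
        cmd.append("--dry-run")  # report what would change without copying or deleting
    return cmd


def run_sync(*args, **kwargs) -> None:
    """Run the sync, raising CalledProcessError if rclone exits non-zero."""
    cmd = rclone_sync_cmd(*args, **kwargs)
    print("running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

For example, `run_sync("old", "new", "gitlab-uploads", dry_run=True)` previews the copy of one bucket; repeat per bucket, then verify the objects on the new provider.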